OWLEN

Terminal-native assistant for running local language models with a comfortable TUI.

Alpha Status

- This project is currently in alpha (v0.1.8) and under active development.

- Core features are functional but expect occasional bugs and missing polish.

- Breaking changes may occur between releases as we refine the API.

- Feedback, bug reports, and contributions are very welcome!

What Is OWLEN?

OWLEN is a Rust-powered, terminal-first interface for interacting with local large language models. It provides a responsive chat workflow that runs against Ollama with a focus on developer productivity, vim-style navigation, and seamless session management—all without leaving your terminal.

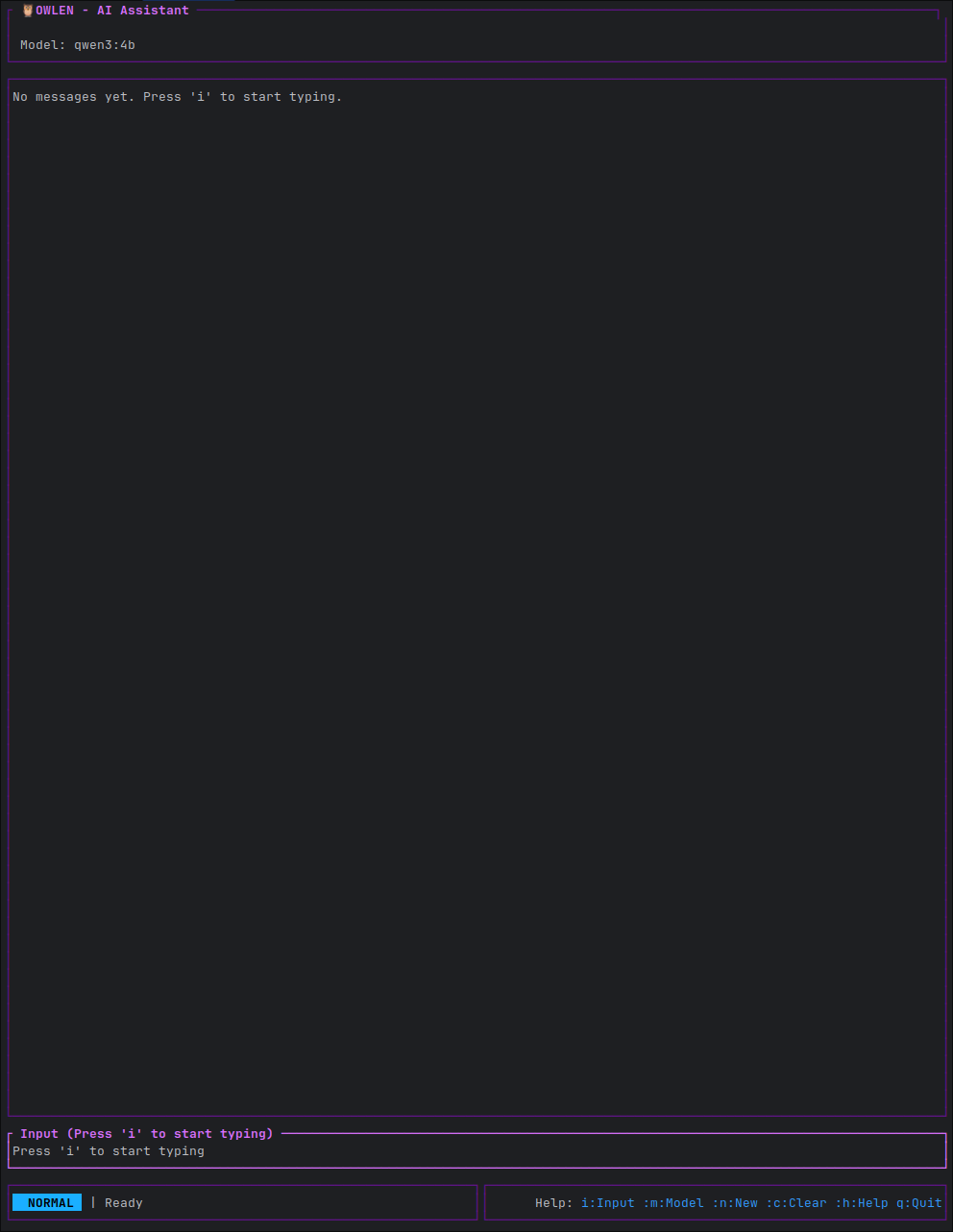

Screenshots

Initial Layout

The OWLEN interface features a clean, multi-panel layout with vim-inspired navigation. See more screenshots in the images/ directory, including:

- Full chat conversations (chat_view.png)

- Help menu (help.png)

- Model selection (model_select.png)

- Visual selection mode (select_mode.png)

Features

Chat Client (owlen)

- Vim-style Navigation - Normal, editing, visual, and command modes

- Streaming Responses - Real-time token streaming from Ollama

- Multi-Panel Interface - Separate panels for chat, thinking content, and input

- Advanced Text Editing - Multi-line input with tui-textarea, history navigation

- Visual Selection & Clipboard - Yank/paste text across panels

- Flexible Scrolling - Half-page, full-page, and cursor-based navigation

- Model Management - Interactive model and provider selection (press m)

- Session Persistence - Save and load conversations to/from disk

- AI-Generated Descriptions - Automatic short summaries for saved sessions

- Session Management - Start new conversations, clear history, browse saved sessions

- Thinking Mode Support - Dedicated panel for extended reasoning content

- Bracketed Paste - Safe paste handling for multi-line content

Code Client (owlen-code) [Experimental]

- All chat client features

- Optimized system prompt for programming assistance

- Foundation for future code-specific features

Core Infrastructure

- Modular Architecture - Separated core logic, TUI components, and providers

- Provider System - Extensible provider trait (currently: Ollama)

- Session Controller - Unified conversation and state management

- Configuration Management - TOML-based config with sensible defaults

- Message Formatting - Markdown rendering, thinking content extraction

- Async Runtime - Built on Tokio for efficient streaming

Getting Started

Prerequisites

- Rust 1.75+ and Cargo (rustup recommended)

- A running Ollama instance with at least one model pulled (defaults to http://localhost:11434)

- A terminal that supports 256 colors

Clone and Build

git clone https://somegit.dev/Owlibou/owlen.git

cd owlen

cargo build --release

Run the Chat Client

Make sure Ollama is running, then launch:

./target/release/owlen

# or during development:

cargo run --bin owlen

(Optional) Try the Code Client

The coding-focused TUI is experimental:

cargo build --release --bin owlen-code --features code-client

./target/release/owlen-code

Using the TUI

Mode System (Vim-inspired)

Normal Mode (default):

- i/Enter - Enter editing mode

- a - Append (move right and enter editing mode)

- A - Append at end of line

- I - Insert at start of line

- o - Insert new line below

- O - Insert new line above

- v - Enter visual mode (text selection)

- : - Enter command mode

- h/j/k/l - Navigate left/down/up/right

- w/b/e - Word navigation

- 0/$ - Jump to line start/end

- gg - Jump to top

- G - Jump to bottom

- Ctrl-d/u - Half-page scroll

- Ctrl-f/b - Full-page scroll

- Tab - Cycle focus between panels

- p - Paste from clipboard

- dd - Clear input buffer

- q - Quit

Editing Mode:

- Esc - Return to normal mode

- Enter - Send message and return to normal mode

- Ctrl-J/Shift-Enter - Insert newline

- Ctrl-↑/↓ - Navigate input history

- Paste events handled automatically

Visual Mode:

- j/k/h/l - Extend selection

- w/b/e - Word-based selection

- y - Yank (copy) selection

- d - Cut selection (Input panel only)

- Esc - Cancel selection
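
The mode switches listed so far can be read as a small state machine. The sketch below is illustrative only: the Mode and Key enums and the next_mode function are hypothetical names, not OWLEN's actual types, and it covers only the transitions this README states. How command mode is left is not described here, so it is not modeled.

```rust
/// Input modes named in this README (hypothetical enum, not OWLEN's own type).
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
pub enum Mode {
    Normal,
    Editing,
    Visual,
    Command,
}

/// Keys reduced to the few that switch modes (hypothetical).
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
pub enum Key {
    Char(char),
    Enter,
    Esc,
}

/// Return the mode that follows `mode` after `key` is pressed.
/// Keys that do not switch modes leave the mode unchanged.
pub fn next_mode(mode: Mode, key: Key) -> Mode {
    match (mode, key) {
        // i, a, A, I, o, O and Enter all enter editing mode from normal mode.
        (Mode::Normal, Key::Enter) => Mode::Editing,
        (Mode::Normal, Key::Char('i' | 'a' | 'A' | 'I' | 'o' | 'O')) => Mode::Editing,
        (Mode::Normal, Key::Char('v')) => Mode::Visual,
        (Mode::Normal, Key::Char(':')) => Mode::Command,
        // Enter sends the message and returns to normal mode; Esc just returns.
        (Mode::Editing, Key::Enter | Key::Esc) => Mode::Normal,
        // Esc cancels the selection.
        (Mode::Visual, Key::Esc) => Mode::Normal,
        // Everything else (navigation, typing, ...) keeps the current mode.
        _ => mode,
    }
}
```

For example, next_mode(Mode::Normal, Key::Char('v')) yields Mode::Visual, while a navigation key such as j leaves normal mode unchanged.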

Command Mode:

- :q/:quit - Quit application

- :c/:clear - Clear conversation

- :m/:model - Open model selector

- :n/:new - Start new conversation

- :h/:help - Show help

- :save [name]/:w [name] - Save current conversation

- :load/:open - Browse and load saved sessions

- :sessions/:ls - List saved sessions
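
One way to picture how these commands and their aliases map onto actions is a small parser. This is a minimal sketch under assumptions: the Command enum and parse_command function are hypothetical names, and OWLEN's real command handling may be structured differently.

```rust
/// Commands from the list above (hypothetical enum, not OWLEN's own type).
#[derive(Debug, Clone, PartialEq, Eq)]
pub enum Command {
    Quit,
    Clear,
    Model,
    New,
    Help,
    /// `:save [name]` / `:w [name]`; the name is optional.
    Save(Option<String>),
    Load,
    Sessions,
}

/// Parse a command line such as ":save my chat" into a `Command`.
/// Returns `None` for unknown or empty input.
pub fn parse_command(input: &str) -> Option<Command> {
    let input = input.trim();
    // Accept the line with or without the leading ':'.
    let input = input.strip_prefix(':').unwrap_or(input);
    // Split into the command name and an optional argument.
    let mut parts = input.splitn(2, char::is_whitespace);
    let name = parts.next()?;
    let arg = parts.next().map(str::trim).filter(|s| !s.is_empty());
    match name {
        "q" | "quit" => Some(Command::Quit),
        "c" | "clear" => Some(Command::Clear),
        "m" | "model" => Some(Command::Model),
        "n" | "new" => Some(Command::New),
        "h" | "help" => Some(Command::Help),
        "save" | "w" => Some(Command::Save(arg.map(str::to_owned))),
        "load" | "open" => Some(Command::Load),
        "sessions" | "ls" => Some(Command::Sessions),
        _ => None,
    }
}
```

Keeping both spellings of each command in one match arm makes the alias table easy to audit against the list above.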

Session Browser (accessed via :load or :sessions):

- j/k/↑/↓ - Navigate sessions

- Enter - Load selected session

- d - Delete selected session

- Esc - Close browser

Panel Management

- Three panels: Chat, Thinking, and Input

- Tab/Shift-Tab - Cycle focus forward/backward

- Focused panel receives scroll and navigation commands

- Thinking panel appears when extended reasoning is available
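
Focus cycling over the three panels could be sketched as follows. FocusedPanel is named in this README as a UI primitive, but its variants, the Chat, Thinking, Input cycle order, and the choice to skip a hidden Thinking panel are all assumptions made for illustration.

```rust
/// Panels that can hold focus (variant names are assumed).
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
pub enum FocusedPanel {
    Chat,
    Thinking,
    Input,
}

impl FocusedPanel {
    /// Panels in cycle order; Thinking is skipped while it is hidden.
    fn order(thinking_visible: bool) -> &'static [FocusedPanel] {
        if thinking_visible {
            &[FocusedPanel::Chat, FocusedPanel::Thinking, FocusedPanel::Input]
        } else {
            &[FocusedPanel::Chat, FocusedPanel::Input]
        }
    }

    fn step(self, thinking_visible: bool, forward: bool) -> Self {
        let order = Self::order(thinking_visible);
        // If the focused panel is currently hidden, count from the first panel.
        let idx = order.iter().position(|p| *p == self).unwrap_or(0);
        let len = order.len();
        let next = if forward {
            (idx + 1) % len
        } else {
            (idx + len - 1) % len
        };
        order[next]
    }

    /// Tab: move focus forward, wrapping around.
    pub fn next(self, thinking_visible: bool) -> Self {
        self.step(thinking_visible, true)
    }

    /// Shift-Tab: move focus backward, wrapping around.
    pub fn prev(self, thinking_visible: bool) -> Self {
        self.step(thinking_visible, false)
    }
}
```

With the Thinking panel hidden, Tab from Chat lands directly on Input; once reasoning content is available, the same key stops at Thinking first.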

Configuration

OWLEN stores configuration in ~/.config/owlen/config.toml. The file is created on first run and can be edited to customize behavior:

[general]

default_model = "llama3.2:latest"

default_provider = "ollama"

enable_streaming = true

project_context_file = "OWLEN.md"

[providers.ollama]

provider_type = "ollama"

base_url = "http://localhost:11434"

timeout = 300
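
On the Rust side, the two tables above could be mirrored by plain structs whose Default implementations carry the same values. This is a sketch under assumptions: the struct names are hypothetical, the field names are copied from the TOML keys, and treating the values shown as the built-in defaults is itself an assumption. The unit of timeout is not stated in this README, so it is left as a bare integer.

```rust
/// Hypothetical mirror of the [general] table.
#[derive(Debug, Clone, PartialEq)]
pub struct GeneralConfig {
    pub default_model: String,
    pub default_provider: String,
    pub enable_streaming: bool,
    pub project_context_file: String,
}

/// Hypothetical mirror of the [providers.ollama] table.
#[derive(Debug, Clone, PartialEq)]
pub struct OllamaConfig {
    pub provider_type: String,
    pub base_url: String,
    /// Unit is not stated in the README; kept as a plain number.
    pub timeout: u64,
}

impl Default for GeneralConfig {
    fn default() -> Self {
        Self {
            default_model: "llama3.2:latest".to_string(),
            default_provider: "ollama".to_string(),
            enable_streaming: true,
            project_context_file: "OWLEN.md".to_string(),
        }
    }
}

impl Default for OllamaConfig {
    fn default() -> Self {
        Self {
            provider_type: "ollama".to_string(),
            base_url: "http://localhost:11434".to_string(),
            timeout: 300,
        }
    }
}
```

Struct update syntax then expresses a partial override, for example OllamaConfig { timeout: 60, ..Default::default() }, in the same way a user would change a single key in the file.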

Storage Settings

Sessions are saved to platform-specific directories by default:

- Linux: ~/.local/share/owlen/sessions

- Windows: %APPDATA%\owlen\sessions

- macOS: ~/Library/Application Support/owlen/sessions
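
The per-platform defaults can be summarized as a pure function. This is a minimal sketch, not OWLEN's actual lookup code: the Platform enum and default_sessions_dir function are hypothetical, and the caller is assumed to pass the home directory on Linux and macOS or the value of %APPDATA% on Windows.

```rust
use std::path::{Path, PathBuf};

/// Platforms listed above (hypothetical enum).
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
pub enum Platform {
    Linux,
    Windows,
    MacOs,
}

/// Build the default sessions directory for `platform`.
/// `base` is the home directory on Linux/macOS and %APPDATA% on Windows.
pub fn default_sessions_dir(platform: Platform, base: &Path) -> PathBuf {
    match platform {
        Platform::Linux => base
            .join(".local")
            .join("share")
            .join("owlen")
            .join("sessions"),
        Platform::Windows => base.join("owlen").join("sessions"),
        Platform::MacOs => base
            .join("Library")
            .join("Application Support")
            .join("owlen")
            .join("sessions"),
    }
}
```

Taking the base directory as a parameter keeps the function free of environment lookups, which makes each platform's path easy to check in a test regardless of where the test runs.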

You can customize this in your config:

[storage]

# conversation_dir = "~/custom/path" # Optional: override default location

max_saved_sessions = 25

generate_descriptions = true # AI-generated summaries for saved sessions

Configuration is automatically saved when you change models or providers.

Repository Layout

owlen/

├── crates/

│ ├── owlen-core/ # Core types, session management, shared UI components

│ ├── owlen-ollama/ # Ollama provider implementation

│ ├── owlen-tui/ # TUI components (chat_app, code_app, rendering)

│ └── owlen-cli/ # Binary entry points (owlen, owlen-code)

├── LICENSE # AGPL-3.0 License

├── Cargo.toml # Workspace configuration

└── README.md

Architecture Highlights

- owlen-core: Provider-agnostic core with session controller, UI primitives (AutoScroll, InputMode, FocusedPanel), and shared utilities

- owlen-tui: Ratatui-based UI implementation with vim-style modal editing

- Separation of Concerns: Clean boundaries between business logic, presentation, and provider implementations

Development

Building

# Debug build

cargo build

# Release build

cargo build --release

# Build with all features

cargo build --all-features

# Run tests

cargo test

# Check code

cargo clippy

cargo fmt

Development Notes

- Standard Rust workflows apply (cargo fmt, cargo clippy, cargo test)

- Codebase uses async Rust (tokio) for event handling and streaming

- Configuration is cached in ~/.config/owlen (wipe to reset)

- UI components are extensively tested in owlen-core/src/ui.rs

Roadmap

Completed ✓

- Streaming responses with real-time display

- Autoscroll and viewport management

- Push user message before loading LLM response

- Thinking mode support with dedicated panel

- Vim-style modal editing (Normal, Visual, Command modes)

- Multi-panel focus management

- Text selection and clipboard functionality

- Comprehensive keyboard navigation

- Bracketed paste support

In Progress

- Session persistence (save/load conversations)

- Theming options and color customization

- Enhanced configuration UX (in-app settings)

- Conversation export (Markdown, JSON, plain text)

Planned

- Code Client Enhancement

  - In-project code navigation

  - Syntax highlighting for code blocks

  - File tree browser integration

  - Project-aware context management

  - Code snippets and templates

- Additional LLM Providers

  - OpenAI API support

  - Anthropic Claude support

  - Local model providers (llama.cpp, etc.)

- Advanced Features

  - Conversation search and filtering

  - Multi-session management

  - Export conversations (Markdown, JSON)

  - Custom keybindings

  - Plugin system

Contributing

Contributions are welcome! Here's how to get started:

- Fork the repository

- Create a feature branch (git checkout -b feature/amazing-feature)

- Make your changes and add tests

- Run cargo fmt and cargo clippy

- Commit your changes (git commit -m 'Add amazing feature')

- Push to the branch (git push origin feature/amazing-feature)

- Open a Pull Request

Please open an issue first for significant changes to discuss the approach.

License

This project is licensed under the GNU Affero General Public License v3.0 (AGPL-3.0) - see the LICENSE file for details.

Acknowledgments

Built with:

- ratatui - Terminal UI framework

- crossterm - Cross-platform terminal manipulation

- tokio - Async runtime

- Ollama - Local LLM runtime

Status: Alpha v0.1.8 | License: AGPL-3.0 | Made with Rust 🦀