Completes Phase 4 (Agentic Loop with ReAct), Phase 7 (Code Execution), and Phase 8 (Prompt Server) as specified in the implementation plan.

**Phase 4: Agentic Loop with ReAct Pattern (agent.rs - 398 lines)**

- Complete AgentExecutor with reasoning loop
- LlmResponse enum: ToolCall, FinalAnswer, Reasoning
- ReAct parser supporting THOUGHT/ACTION/ACTION_INPUT/FINAL_ANSWER
- Tool discovery and execution integration
- AgentResult with iteration tracking and message history
- Integration with owlen-agent CLI binary and TUI

**Phase 7: Code Execution with Docker Sandboxing**

*Sandbox Module (sandbox.rs - 255 lines):*

- Docker-based execution using bollard
- Resource limits: 512 MB memory, 50% CPU
- Network isolation (no network access)
- Timeout handling (30 s default)
- Container auto-cleanup
- Support for Rust, Node.js, and Python environments

*Tool Suite (tools.rs - 410 lines):*

- CompileProjectTool: Build projects with auto-detection
- RunTestsTool: Execute test suites with optional filters
- FormatCodeTool: Run formatters (rustfmt/prettier/black)
- LintCodeTool: Run linters (clippy/eslint/pylint)
- All tools support check-only and auto-fix modes

*MCP Server (lib.rs - 183 lines):*

- Full JSON-RPC protocol implementation
- Tool registry with dynamic dispatch
- Support for the initialize, tools/list, and tools/call methods

**Phase 8: Prompt Server with YAML & Handlebars**

*Prompt Server (lib.rs - 405 lines):*

- YAML-based template storage in ~/.config/owlen/prompts/
- Handlebars 6.0 template engine integration
- PromptTemplate with metadata (name, version, mode, description)
- Four MCP tools:
  - get_prompt: Retrieve a template by name
  - render_prompt: Render with Handlebars variables
  - list_prompts: List all available templates
  - reload_prompts: Hot-reload from disk

*Default Templates:*

- chat_mode_system.yaml: ReAct prompt for chat mode
- code_mode_system.yaml: ReAct prompt with code tools

**Configuration & Integration:**

- Added Agent module to owlen-core
- Updated owlen-agent binary to use the new AgentExecutor API
- Updated TUI to integrate with the agent result structure
- Added error handling for the Agent variant

**Dependencies Added:**

- bollard 0.17 (Docker API)
- handlebars 6.0 (templating)
- serde_yaml 0.9 (YAML parsing)
- tempfile 3.0 (temporary directories)
- uuid 1.0 with v4 feature

**Tests:**

- mode_tool_filter.rs: Tool filtering by mode
- prompt_server.rs: Prompt management tests
- Sandbox tests (Docker-dependent, marked #[ignore])

All code compiles successfully and follows project conventions.

🤖 Generated with [Claude Code](https://claude.com/claude-code)

Co-Authored-By: Claude <noreply@anthropic.com>
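The commit describes a ReAct parser that turns THOUGHT/ACTION/ACTION_INPUT/FINAL_ANSWER markers into an LlmResponse. The following is a minimal, std-only illustration of that idea, not the code in agent.rs. The enum's field names, the one-line-per-marker format, and giving FINAL_ANSWER precedence over ACTION are all assumptions.

```rust
/// Hypothetical mirror of the LlmResponse enum named in the commit message;
/// the field names are assumptions, not owlen-core's actual definition.
#[derive(Debug, PartialEq)]
enum LlmResponse {
    ToolCall { thought: Option<String>, tool: String, input: String },
    FinalAnswer { thought: Option<String>, answer: String },
    Reasoning { thought: String },
}

/// Returns the trimmed text after `prefix` when `line` starts with it.
fn field<'a>(line: &'a str, prefix: &str) -> Option<&'a str> {
    line.strip_prefix(prefix).map(str::trim)
}

/// Parses one model reply. Assumes each marker and its value share a line;
/// lines without a known marker are ignored.
fn parse_react(output: &str) -> Option<LlmResponse> {
    let (mut thought, mut action, mut input, mut answer) = (None, None, None, None);

    for line in output.lines().map(str::trim) {
        if let Some(v) = field(line, "THOUGHT:") {
            thought = Some(v.to_string());
        } else if let Some(v) = field(line, "ACTION_INPUT:") {
            input = Some(v.to_string());
        } else if let Some(v) = field(line, "ACTION:") {
            action = Some(v.to_string());
        } else if let Some(v) = field(line, "FINAL_ANSWER:") {
            answer = Some(v.to_string());
        }
    }

    // Assumption: a final answer wins over any tool call in the same reply.
    if let Some(answer) = answer {
        return Some(LlmResponse::FinalAnswer { thought, answer });
    }
    match (action, thought) {
        (Some(tool), thought) => Some(LlmResponse::ToolCall {
            thought,
            tool,
            input: input.unwrap_or_default(),
        }),
        (None, Some(thought)) => Some(LlmResponse::Reasoning { thought }),
        (None, None) => None,
    }
}
```

In an agent loop, a ToolCall result would be dispatched to the named tool and its output fed back as the next observation. A FinalAnswer ends the loop, and a bare Reasoning step simply continues to the next iteration.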

OWLEN

Terminal-native assistant for running local language models with a comfortable TUI.

What Is OWLEN?

OWLEN is a Rust-powered, terminal-first interface for interacting with local large language models. It provides a responsive chat workflow that runs against Ollama with a focus on developer productivity, vim-style navigation, and seamless session management—all without leaving your terminal.

Alpha Status

This project is currently in alpha and under active development. Core features are functional, but expect occasional bugs and breaking changes. Feedback, bug reports, and contributions are very welcome!

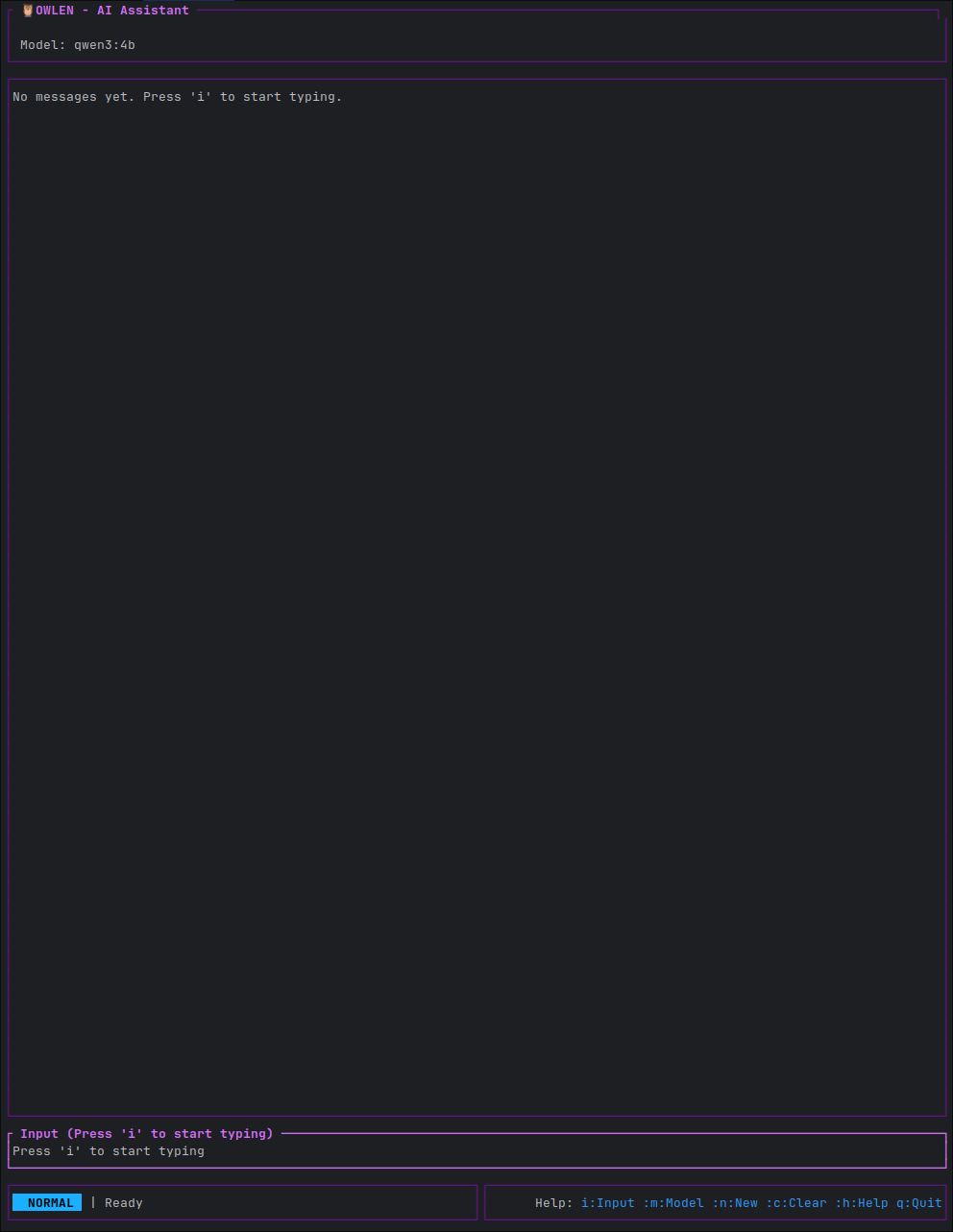

Screenshots

The OWLEN interface features a clean, multi-panel layout with vim-inspired navigation. See more screenshots in the images/ directory.

Features

- Vim-style Navigation: Normal, editing, visual, and command modes.

- Streaming Responses: Real-time token streaming from Ollama.

- Advanced Text Editing: Multi-line input, history, and clipboard support.

- Session Management: Save, load, and manage conversations.

- Theming System: 10 built-in themes and support for custom themes.

- Modular Architecture: Extensible provider system (currently Ollama).
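The extensible provider system and streaming responses can be pictured as a trait that each backend implements, with streaming exposed as a per-token callback. This is a hypothetical sketch: Message, Provider, stream_chat, and EchoProvider are illustrative names, not OWLEN's actual API (docs/provider-implementation.md describes the real interface).

```rust
/// Hypothetical chat message type; OWLEN's real message struct may differ.
#[derive(Debug, Clone)]
pub struct Message {
    pub role: String,
    pub content: String,
}

/// Hypothetical provider interface: each backend (currently only Ollama)
/// would implement this trait. Not the actual owlen-core API.
pub trait Provider {
    fn name(&self) -> &str;

    /// Streams the reply token by token through `on_token`, then returns
    /// the full reply so the caller can store it in the session.
    fn stream_chat(
        &self,
        messages: &[Message],
        on_token: &mut dyn FnMut(&str),
    ) -> Result<String, String>;
}

/// A stand-in provider that echoes the last message word by word,
/// useful for exercising the streaming path without a running Ollama.
pub struct EchoProvider;

impl Provider for EchoProvider {
    fn name(&self) -> &str {
        "echo"
    }

    fn stream_chat(
        &self,
        messages: &[Message],
        on_token: &mut dyn FnMut(&str),
    ) -> Result<String, String> {
        let last = messages.last().ok_or("no messages to reply to")?;
        let mut reply = String::new();
        for (i, word) in last.content.split_whitespace().enumerate() {
            // Re-insert a single space before every word except the first.
            let token = if i == 0 { word.to_string() } else { format!(" {word}") };
            on_token(&token);
            reply.push_str(&token);
        }
        Ok(reply)
    }
}
```

Taking the callback as `&mut dyn FnMut(&str)` rather than a generic parameter keeps the trait object-safe, so a UI could hold a `Box<dyn Provider>` and redraw as each token arrives.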

Getting Started

Prerequisites

- Rust 1.75+ and Cargo.

- A running Ollama instance.

- A terminal that supports 256 colors.

Installation

Linux & macOS

The recommended way to install on Linux and macOS is to clone the repository and install using cargo.

git clone https://github.com/Owlibou/owlen.git

cd owlen

cargo install --path crates/owlen-cli

Note for macOS: This method works, but official binary releases for macOS are planned.

Windows

The Windows build has not been thoroughly tested yet. Installation is possible via the same cargo install method, but it is considered experimental at this time.

Running OWLEN

Make sure Ollama is running, then launch the application:

owlen

If you built from source with cargo build --release instead of installing, you can run the binary directly:

./target/release/owlen
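If OWLEN cannot reach Ollama, a quick pre-flight check is to see whether anything is listening on Ollama's default address, 127.0.0.1:11434. The helper below is a hypothetical std-only sketch, not part of OWLEN. It only checks that the TCP port accepts connections, not that the listener is actually Ollama.

```rust
use std::net::{TcpStream, ToSocketAddrs};
use std::time::Duration;

/// Ollama's default listen address (port 11434 on localhost).
const OLLAMA_DEFAULT_ADDR: &str = "127.0.0.1:11434";

/// Hypothetical helper: returns true if any address that `addr` resolves to
/// accepts a TCP connection within `timeout`. It does not verify that the
/// listener is actually Ollama.
fn is_listening(addr: &str, timeout: Duration) -> bool {
    let Ok(mut addrs) = addr.to_socket_addrs() else {
        return false; // unparsable or unresolvable address
    };
    addrs.any(|a| TcpStream::connect_timeout(&a, timeout).is_ok())
}
```

If is_listening(OLLAMA_DEFAULT_ADDR, Duration::from_secs(1)) returns false, start Ollama (for example with ollama serve) before launching owlen.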

Using the TUI

OWLEN uses a modal, vim-inspired interface. Press ? in Normal mode to view the help screen with all keybindings.

- Normal Mode: Navigate with h/j/k/l, w/b, and gg/G.

- Editing Mode: Enter with i or a. Send messages with Enter.

- Command Mode: Enter with : to access commands like :quit, :save, and :theme.
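The modal behavior described above can be pictured as a small state machine. This is a hypothetical sketch, not OWLEN's input handler. The Key and Action types are invented for illustration. Three behaviors are also assumptions based on vim conventions, since this README does not specify them: Esc returns to Normal mode, Enter keeps you in Editing mode after sending, and Enter leaves Command mode.

```rust
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
enum Mode {
    Normal,
    Editing,
    Command,
}

/// Hypothetical, simplified key events.
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
enum Key {
    Char(char),
    Enter,
    Esc,
}

/// What the app should do after a key press, besides switching mode.
#[derive(Debug, PartialEq, Eq)]
enum Action {
    None,
    SendMessage,
    RunCommand,
}

fn handle_key(mode: Mode, key: Key) -> (Mode, Action) {
    match (mode, key) {
        // i or a enters Editing mode from Normal mode.
        (Mode::Normal, Key::Char('i' | 'a')) => (Mode::Editing, Action::None),
        // : enters Command mode from Normal mode.
        (Mode::Normal, Key::Char(':')) => (Mode::Command, Action::None),
        // Assumption: sending keeps the user in Editing mode.
        (Mode::Editing, Key::Enter) => (Mode::Editing, Action::SendMessage),
        // Assumption: running a command returns to Normal mode.
        (Mode::Command, Key::Enter) => (Mode::Normal, Action::RunCommand),
        // Assumption: Esc returns to Normal mode, as in vim.
        (_, Key::Esc) => (Mode::Normal, Action::None),
        // Everything else (navigation, typing) keeps the current mode.
        (m, _) => (m, Action::None),
    }
}
```

Keeping the transition function pure makes each keybinding easy to unit-test in isolation from terminal rendering.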

Documentation

For more detailed information, please refer to the following documents:

- CONTRIBUTING.md: Guidelines for contributing to the project.

- CHANGELOG.md: A log of changes for each version.

- docs/architecture.md: An overview of the project's architecture.

- docs/troubleshooting.md: Help with common issues.

- docs/provider-implementation.md: A guide for adding new providers.

Configuration

OWLEN stores its configuration in ~/.config/owlen/config.toml. This file is created on the first run and can be customized. You can also add custom themes in ~/.config/owlen/themes/.

See themes/README.md for more details on theming.
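The following std-only Rust sketches the first-run behavior described above. It shows how the config file and themes directory relate, and how a config file might be created only when it is missing. The DEFAULT_CONFIG contents and all helper names are hypothetical. This README does not show OWLEN's real default config or loader.

```rust
use std::fs;
use std::io;
use std::path::{Path, PathBuf};

/// Hypothetical placeholder; OWLEN's real default config is not shown here.
const DEFAULT_CONFIG: &str = "# OWLEN configuration\n";

/// Locations under `home` that the README describes.
struct ConfigPaths {
    config_file: PathBuf, // ~/.config/owlen/config.toml
    themes_dir: PathBuf,  // ~/.config/owlen/themes/
}

fn config_paths(home: &Path) -> ConfigPaths {
    let base = home.join(".config").join("owlen");
    ConfigPaths {
        config_file: base.join("config.toml"),
        themes_dir: base.join("themes"),
    }
}

/// Creates the config file on first run and leaves an existing file untouched.
/// Returns true if this call created the file.
fn ensure_config(paths: &ConfigPaths) -> io::Result<bool> {
    if paths.config_file.exists() {
        return Ok(false);
    }
    if let Some(parent) = paths.config_file.parent() {
        fs::create_dir_all(parent)?;
    }
    fs::write(&paths.config_file, DEFAULT_CONFIG)?;
    Ok(true)
}
```

In a real program, home would typically come from the HOME environment variable. Passing it in as a parameter keeps the sketch testable against a temporary directory.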

Roadmap

We are actively working on enhancing the code client, adding more providers (OpenAI, Anthropic), and improving the overall user experience. See the Roadmap section in the old README for more details.

Contributing

Contributions are highly welcome! Please see our Contributing Guide for details on how to get started, including our code style, commit conventions, and pull request process.

License

This project is licensed under the GNU Affero General Public License v3.0. See the LICENSE file for details.